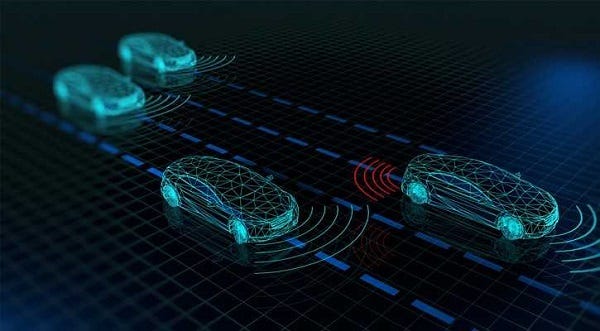

Multi-Sensor Data Fusion (MSDF) for Driverless Cars, An Essential Primer

Dr. Lance B. Eliot, AI Insider

A crucial element of many AI systems is the capability to undertake Multi-Sensor Data Fusion (MSDF), which consists of collecting and then trying to reconcile, harmonize, integrate, and synthesize the data about the surroundings and environment in which the AI system is operating. Simply stated, the sensors of the AI system are its eyes, ears, and other sensory inputs, while the AI must somehow interpret and assemble the sensory data into a cohesive and usable interpretation of the real world.

If the sensor fusion does a poor job of discerning what’s out there, the AI is essentially blind or misled when making life-or-death algorithmic decisions. Furthermore, the sensor fusion needs to be performed on a timely basis.

Humans do sensor fusion all the time, in our heads, though we rarely give it any explicit thought. It just happens, naturally.

The other day, I was driving in the downtown Los Angeles area. There is always an abundance of traffic, including cars, bikes, motorcycles, scooters, and pedestrians who are prone to jaywalking. There is a lot to pay attention to. Is that bicyclist going to stay in the bike lane or decide to veer into the street?

I had my radio on, listening to the news reports, when I began to faintly hear the sound of a siren, seemingly off in the distance. As I strained to hear the siren, I also kept my eyes peeled, anticipating that if the siren was nearby, a police car, ambulance, or fire truck might soon go rocketing past me.

I decided that the siren was definitely getting more distinctive and pronounced. The echoes along the streets and buildings were creating some difficulty in deciding where the siren was coming from. I could not determine if the siren was behind me or somewhere in front of me.

At times like this, your need to do some sensor fusion is crucial.

Your eyes are looking for any telltale sign of an emergency vehicle. Maybe the flashing lights might be seen from a distance. Perhaps other traffic might start to make way for the emergency vehicle, and that’s a visual clue that the vehicle is…